About 150 frames had been rendered successfully on the renderfarm when Ladji finished texturing the seaforts with higher-resolution textures, so we killed the ongoing render and set up another one using Ladji's textures. From that point on, however, the jobs failed repeatedly, even though we believed everything had been set up correctly.

We therefore broke our scenes down and tested the few major elements that are computationally heavy and might have caused the failures: Global Illumination and Final Gathering with HDRI lighting. To be sure, we also ran a separate test of the Ocean Shader on its own.

Ball Test Renderfarm from Reno Cicero on Vimeo.

This is a simple test of a ball and a plane with Blinn shaders and a spot light, to check whether the renderfarm was working at all.

OceanShader_Renderfarm Test from Reno Cicero on Vimeo.

This is a test of the Ocean Shader on its own, to see whether the renderfarm could render this element in a scene.

GI_FG_Renderfarm Test from Reno Cicero on Vimeo.

Keeping the ocean, we lit the scene with an HDRI and placed two reflective and refractive objects in it, to test whether the renderfarm could render a scene that uses Global Illumination and Final Gathering.

After this series of tests on the renderfarm, and based on the 'Render Diagnostic' results shown above, we suspected that the problem was the very high resolution of Ladji's textures.

The image above shows the interface of Qube, the software we used to access the renderfarm.

The college does provide a Mac version of the software on the college server 'Vanguard', but all our attempts to use it failed. This is why we usually worked in the prototyping room on the seventh floor of the college, where a dozen PCs with the software installed are located. However, these computers are old and slow, and their connection to the college network often had problems, which made the rendering process far more frustrating and time-consuming.

We therefore decided to render the scene with the low-resolution textures that Jure had made for the seaforts earlier, importing all the other scene elements into Jure's seafort scene file. This render completed successfully within a day, which shows how efficient the renderfarm is when it works correctly, and it helped us enormously in improving the scene, especially the camera path.

Second Test Render from Reno Cicero on Vimeo.

After the first render, Reno added fog and clouds to the scene and changed the colour of the ocean, aiming for an atmospheric look better suited to our theme of decay. We also improved the camera path, adding a few more interesting shots and angles. I experimented further with the scale of 'Global Illumination' and 'Final Gathering' to improve the lighting.

When the render came out, we felt that the ocean was too red. The lighting was also brighter than we intended, which in turn changed the colour of the clouds, since they respond to the lighting. The fog flickered a little too much, but we put this down to the camera movement and considered it unavoidable.

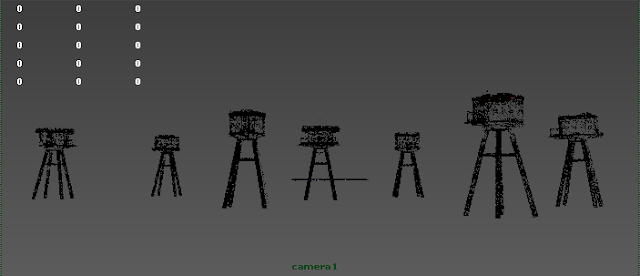

At this point we also realised that the textures we had used for the seaforts were too low in resolution, so the images look rough and unrealistic when the camera gets close to the models.

Although the second render succeeded, this was when the renderfarm's processors began to stop working one by one. As a result, the render time increased dramatically, and the render eventually took about four days to complete. Reno and I spent a lot of time trying to work out what was happening to the renderfarm. We tried connecting to it from our laptops as well as from the PCs on the seventh floor, but both attempts failed because of our limited knowledge of the renderfarm. We then spoke to some of our tutors and realised that the problems were on the technical side of the renderfarm itself, so they recommended that we file a report through JIRA, so that the technicians could log the problems officially and deal with them.

We also learned to set up render layers to give us maximum control over the colour grading and compositing of the scene. When Simon from IT finally returned from holiday, he restarted all the processors and updated some firmware to get the renderfarm up and running again. We then happily submitted our scene to the renderfarm. We stayed in college all night to make sure everything ran smoothly, and we were convinced that we would get our first scenes rendered out nicely in layers. However, we were wrong.

When we came back the next morning, what awaited us was a failed render that had run for approximately seven hours. That was when we realised how sensitive the render is to the match between the settings inside Maya and the settings on the renderfarm.